Have you ever pondered if artificial intelligence can be biased?

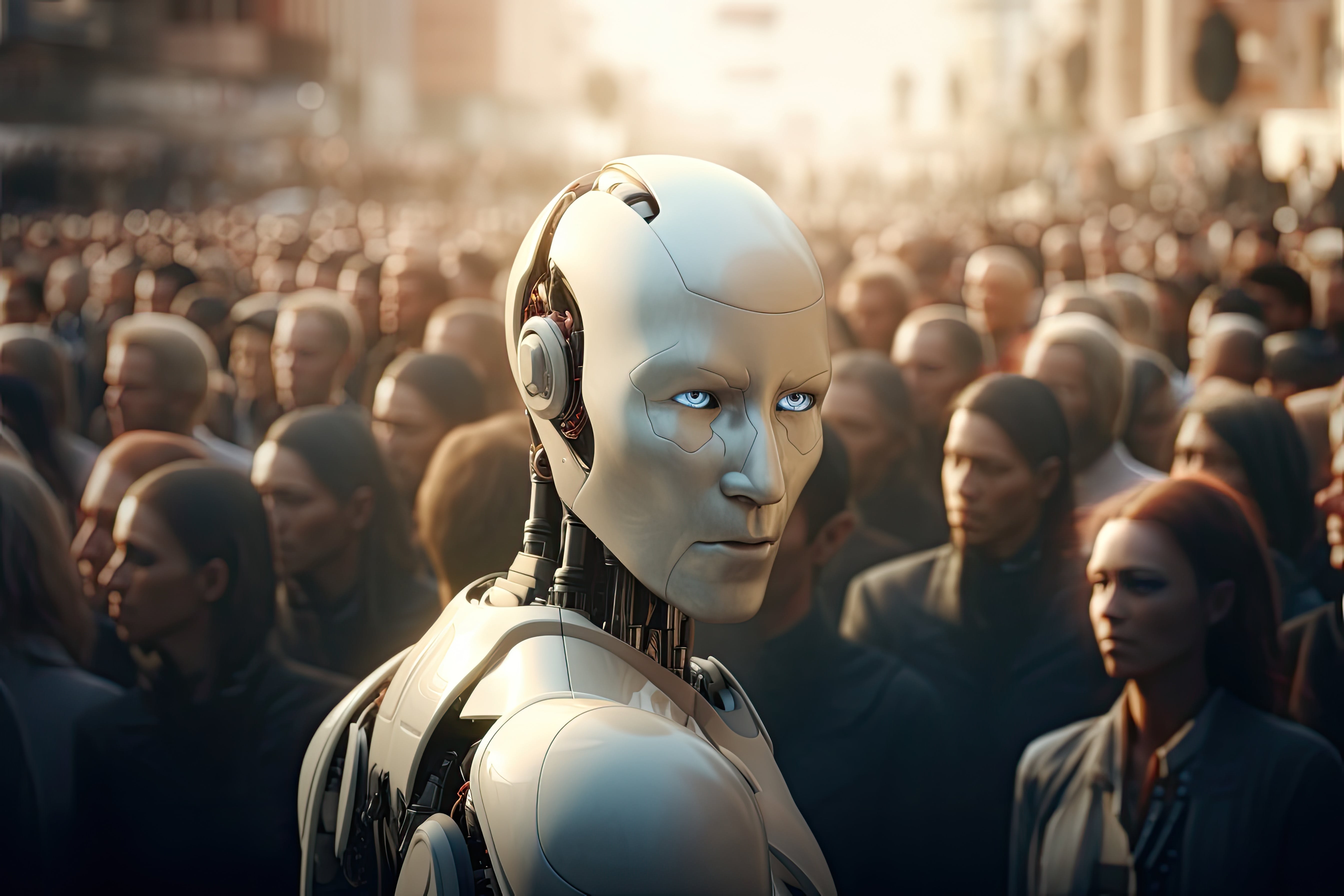

Artificial intelligence (AI), in the form of chatbots, algorithms, face recognition, and image generators, is becoming increasingly pervasive in our society. It powers everything from chatbots that answer our queries to algorithms that predict our preferences. But here is a thought: can AI be biased? The answer, surprisingly, is yes.

Just like the human minds that create them, AI systems can harbor biases. These biases can manifest in various forms and have real-world implications, sometimes serious ones. From influencing medical decisions to shaping societal perceptions, bias in AI can affect us in ways we may not even realize. The crux of the matter is that AI is not just a machine. It reflects its creators, and it operates within the parameters they set. So, let us dive into some case studies that illustrate this bias in AI.

Case study 1: AI in the medical field

Doctors at Stanford University recently discovered something alarming about AI chatbots. They ran nine questions through four AI chatbots, including OpenAI's ChatGPT and Google's Bard, which are trained on large amounts of Internet text. In their replies, the models used debunked race-based equations for kidney and lung function. The medical establishment increasingly recognizes that such equations could lead to misdiagnosis or delayed care for Black patients.

Imagine a life-or-death decision potentially swayed by an AI's racial bias. It is a chilling thought, isn't it?

We must remember that these AI chatbots are not inherently racist. They are machines, and they simply mirror the biases present in the data they were trained on. The real concern is why this bias is present in the data to begin with. This brings us to a critical question: how does such bias get embedded in AI?

Case study 2: The Gender Shades project

The answer lies in the data AI is trained on, as revealed by researcher Joy Buolamwini in her eye-opening investigation, the Gender Shades project. Buolamwini, who is a Black woman, began a systematic investigation after testing her TED speaker photo on facial analysis technology from leading companies. She was alarmed to find that some companies' AI did not detect her face at all, while others labeled her face as male.

As a result, she expanded her research. Analyzing results on 1,270 unique faces, the Gender Shades authors uncovered severe gender and skin-type bias in gender classification.

The error rate was highest for darker female faces, exceeding one in three. This is for a binary task where even a random guess has a 50-50 chance of being correct. In contrast, the AI performed flawlessly when classifying lighter males, with a 0% error rate.
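To make the idea of breaking error rates down by subgroup concrete, here is a minimal Python sketch. It is not the Gender Shades code, and the records are made-up toy values chosen only for illustration; the function name `error_rate_by_group` is hypothetical. It shows how a single overall error rate can hide a much higher error rate for one group.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: share of records where the prediction is wrong}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, actual, predicted in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up toy records: (group, actual label, predicted label).
# These are NOT the Gender Shades data.
toy_records = [
    ("darker female", "female", "male"),
    ("darker female", "female", "female"),
    ("darker female", "female", "female"),
    ("lighter male", "male", "male"),
    ("lighter male", "male", "male"),
    ("lighter male", "male", "male"),
]

rates = error_rate_by_group(toy_records)
overall = sum(actual != predicted for _, actual, predicted in toy_records) / len(toy_records)

print("Per-group error rates:", rates)
print("Overall error rate:", overall)
```

In this toy data the overall error rate looks modest, yet every mistake falls on one group. That is why reporting results per subgroup, rather than as one aggregate number, matters.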

We risk losing the gains made with the civil rights movement and women's movement under the false assumption of machine neutrality.

Automated systems are not inherently neutral. They reflect the priorities, preferences, and prejudices (the coded gaze) of those who have the power to mold artificial intelligence.

Case study 3: Bantu knots and image generators

But what about cultural biases? AI image generators like Stable Diffusion and DALL-E have also been found to magnify biases and stereotypes related to gender, race, and culture. The root of the issue lies in the data used to train these models. This data is often drawn from the Internet, which is known to present a skewed Western perspective. The biases in the training data can result in stereotypical or inappropriate images being generated in response to certain prompts. Below we share a few examples.

Figure 1: The prompt 'attractive people' yields images of young white people.

Figure 2: The prompt 'Muslim men' results in images of older men, all of whom are from Southeast Asia.

Figure 3: The prompt 'toys in Iraq' yields only military toys.

So far, we have established that bias in AI is a mirror of human biases. But is it limited to medicine, gender, and culture? Sadly, the answer is no.

Case study 4: Language bias. Are ChatGPT and other chatbots cutting non-English languages out of the AI revolution?

Large language models like ChatGPT are designed to translate languages, interact, and answer questions. But here is the rub: they do a much better job translating from other languages into English than the other way around. Some of these systems also tend to default to English responses, even when asked in a different language. This indicates a clear bias towards English. But things do not stop there. Tests on seven popular AI text detectors found that articles written by people who did not speak English as a first language were often wrongly flagged as AI-generated, a bias that could have a serious impact on students, academics, and job applicants.
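One way to quantify the detector problem described above is to compare false positive rates across groups of writers, that is, how often genuinely human-written text is wrongly flagged as AI-generated. The Python sketch below is only an illustration under stated assumptions: the samples are invented, the group names are placeholders, and `false_positive_rates` is a hypothetical helper, not part of any detector or of the study mentioned above.

```python
def false_positive_rates(samples):
    """Return {group: share of human-written texts wrongly flagged as AI}."""
    counts = {}
    for group, is_ai_written, flagged in samples:
        if is_ai_written:
            continue  # only human-written texts can be false positives
        human, wrong = counts.get(group, (0, 0))
        counts[group] = (human + 1, wrong + int(flagged))
    return {group: wrong / human for group, (human, wrong) in counts.items()}


# Invented toy samples: (writer group, is the text AI-written?, did the detector flag it?).
toy_samples = [
    ("non-native English", False, True),
    ("non-native English", False, True),
    ("non-native English", False, False),
    ("non-native English", False, False),
    ("non-native English", True, True),   # AI-written, ignored by the metric
    ("native English", False, True),
    ("native English", False, False),
    ("native English", False, False),
    ("native English", False, False),
    ("native English", True, True),       # AI-written, ignored by the metric
]

fpr = false_positive_rates(toy_samples)
print(fpr)
```

A large gap between the two rates would suggest that the detector penalizes one group of human writers more than the other, even if its overall accuracy looks acceptable.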

Despite the technology’s limitations, workers around the world are turning to chatbots for help crafting business ideas, drafting corporate emails, and perfecting software code.

If the tools continue to work best in English, they could increase the pressure to learn the language on people hoping to earn a spot in the global economy. That could further a spiral of imposition and influence of English that began with the British Empire.

Conclusion

As we have established, bias in AI is a multifaceted issue that needs urgent attention. We have seen how AI can exhibit racial bias, as when chatbots repeat debunked race-based medical equations, which could lead to misdiagnosis or delayed care for Black patients. Then there is gender bias, with the error rate on darker female faces exceeding one in three. Cultural bias also rears its head, with AI image generators amplifying stereotypes because of a skewed Western perspective in their training data. And let us not forget language bias, where AI tends to default to English responses, showing a preference for English over other languages. These biases are a reminder that AI is a product of human creation and, like us, it can be flawed. To ensure a fair and inclusive future, we must address these biases in AI now. The AI of tomorrow is only as unbiased as the data we feed it today.

References

- Garance Burke and Matt O'Brien, Health providers say AI chatbots could improve care. But research says some are perpetuating racism.

- Nitasha Tiku, Kevin Schaul and Szu Yu Chen, These fake images reveal how AI amplifies our worst stereotypes

- Paresh Dave, ChatGPT Is Cutting Non-English Languages Out of the AI Revolution

- Ian Sample, Programs to detect AI discriminate against non-native English speakers, shows study.